
D-Lib Magazine

May/June 2013

Volume 19, Number 5/6


Choosing a Sustainable Web Archiving Method: A Comparison of Capture Quality

Gabriella Gray and Scott Martin

UCLA

{gsgray, smartin}@library.ucla.edu

doi:10.1045/may2013-gray


Abstract

The UCLA Online Campaign Literature Archive has been collecting websites from Los Angeles and California elections since 1998. Over the years the number of websites created for these campaigns has soared while the number of staff manually capturing the websites has remained constant. By 2012 it became apparent that we would need to find a more sustainable model if we were to continue to archive campaign websites. Our goal was to find an automated tool that could match the high-quality captures produced by the Archive's existing labor-intensive manual capture process. The tool we chose to investigate was the California Digital Library's Web Archiving Service (WAS). To test the quality of WAS captures we created a duplicate capture of the June 2012 California election using both WAS and our manual capture and editing processes. We then compared the two sets of captures to measure their relative quality. This paper presents our findings and contributes a unique empirical analysis of the quality of websites archived using two divergent web archiving methods and sets of tools.

Keywords: Web Archiving, UCLA Online Campaign Literature Archive, CDL Web Archiving Service

Introduction

The UCLA Online Campaign Literature Archive is a digital collection containing archived websites and digitized printed ephemera from Los Angeles and California elections. The earliest piece of digitized printed material, The Lincoln-Roosevelt Republican League, dates back to 1908. Ninety years later we began adding archived websites to the collection using a variety of desktop web capture programs, supplemented by manual downloading of missed files and direct editing of links and files. (For a full description of the process, see Gray & Martin, 2007.)

In the spring of 2012, a University of California (UC) colleague contacted Archive staff asking: if we had to do it all over again, would we use the same manual capture and editing process and tools we use now, or would we opt for a full service tool like the California Digital Library's University of California Curation Center Web Archiving Service (WAS)? Our response was that in 1998 tools like WAS and Archive-It, which "are service providers that provide technical infrastructure, data storage and training for other organizations" (Niu, 2012, p. 2), were not available and so were not options. But given the advancements in web archiving tools over the past ten years, we were in the midst of re-examining the sustainability of our current model and seriously considering using WAS to capture future elections.

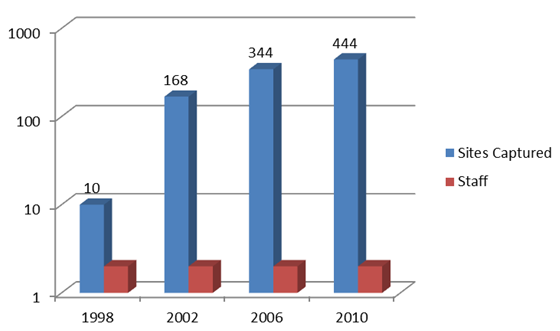

In 1998, when the UCLA Campaign Literature Archive began capturing and archiving election websites, we found only 10 websites from the Los Angeles and California races that fell within the Archive's scope. Twelve years later, in 2010, the number of websites created for these campaigns had grown to 444. By contrast, the number of staff collecting and archiving these websites (two) is the same now as it was in 1998.

Figure 1: Workload and Staffing Changes Over Time (Logarithmic Scale)

As these numbers show, our existing model was becoming unsustainable and we needed to move to a new model if we were to continue capturing and archiving campaign websites. Our reluctance to move away from our existing labor-intensive manual process was rooted in the high quality capture results our method produced. Thus, finding an automated tool that could match, or come close to matching, the quality of our manual captures was the most important element we considered as we evaluated our options.

The logical first choice to investigate was our own UC web archiving tool, WAS. The UCLA Library was already a WAS partner, so we had an existing subscription and were familiar with the tool from our work as one of the curatorial partners on "The Web-at-Risk", the National Digital Information Infrastructure and Preservation Program (NDIIPP) grant funded project that designed WAS. Some thought was also given to whether UCLA should discontinue collecting campaign sites entirely, given the existence of larger projects elsewhere such as the Library of Congress U.S. Elections Web Archives and the Internet Archive Wayback Machine. However, cursory comparisons of UCLA's captured content with those archives revealed that over half of the content being captured by UCLA was not duplicated elsewhere.

Research Project Description

The Web Archiving Service (WAS), which is based on the Heritrix crawler, is essentially a "What You See Is What You Get" (WYSIWYG) tool. WAS includes a limited set of options that allow curators to adjust the settings used to capture a particular website, but curators cannot edit or modify the final capture results. Ultimately the decision as to whether WAS was a viable alternative to our current method would rest on the quality of the captures (the WYG). We decided to empirically test the quality of WAS capture results by creating duplicate captures of the June 2012 California primary election. We captured 189 websites using WAS and then captured the same 189 websites using our existing manual capture and editing process. We then analyzed and compared the two sets of captures site by site to determine how much content would be lost and/or gained by switching to WAS.

Robots.txt

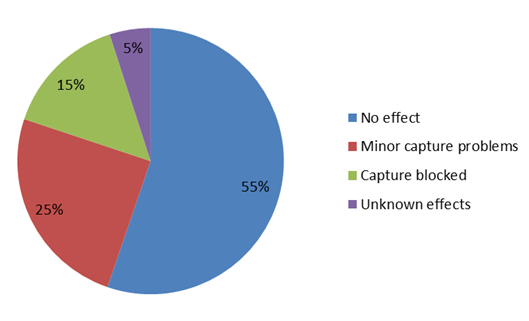

We first considered how WAS handled robots.txt files, which are files placed on web servers that contain instructions for web crawlers. A robots.txt file can block a compliant web crawler such as WAS from capturing some or all parts of a website. Our manual capture tools allowed us to override robots.txt file instructions, an option not available in WAS. Thus we knew the robots.txt setting in WAS would prevent us from fully capturing some number of targeted websites which our manual capture tools enabled us to acquire, but we did not know how many. Therefore, before beginning the actual capture process we analyzed the robots.txt files from a preliminary list of 181 websites and discovered the following results:

- 27 sites (15%) would be entirely blocked or would yield unusable captures. Robots.txt blocked access to whole sites or to key directories required for site navigation.

- 45 sites (25%) would experience at least minor capture problems, such as loss of CSS files, images, or drop-down menus. Robots.txt blocked access to directories containing ancillary file types such as images, CSS, or JavaScript, which provided much of the "look and feel" of the site.

- 9 sites (5%) would be affected in unknown ways. This category was applied to sites with particularly complicated robots.txt files and/or uncommon directory names, where it was not clear what files were located in the blocked directories.

- 100 sites (55%) would be unaffected. The robots.txt file was not present, contained no actual blocks, or blocked only specific crawlers.

Figure 2: Estimated Potential Effects of Robots.txt on June 2012 Sites.
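The kind of pre-capture triage described above can be approximated in code. The following is a minimal sketch, not the procedure we actually followed: it uses Python's standard `urllib.robotparser` module, and the sample paths, the crawler name `example-archiver`, and the category labels are all assumptions chosen for illustration. It does not attempt to model the "unknown" category, which depended on human judgment about what the blocked directories contained.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical sample paths; a real review would use paths taken from each site.
KEY_PATHS = ["/", "/about/", "/issues/"]
ANCILLARY_PATHS = ["/images/logo.png", "/css/style.css", "/js/menu.js"]


def classify_robots(robots_txt: str, agent: str = "example-archiver") -> str:
    """Estimate how a robots.txt file would affect a compliant crawler.

    `agent` is a made-up crawler name used only for this illustration.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    # Blocks on the home page or navigation directories make a capture unusable.
    if any(not parser.can_fetch(agent, path) for path in KEY_PATHS):
        return "blocked"
    # Blocks on images, CSS, or JavaScript damage the look and feel only.
    if any(not parser.can_fetch(agent, path) for path in ANCILLARY_PATHS):
        return "minor problems"
    # Missing file, no actual blocks, or blocks aimed at other crawlers.
    return "no effect"


if __name__ == "__main__":
    samples = {
        "empty file": "",
        "whole site blocked": "User-agent: *\nDisallow: /",
        "images blocked": "User-agent: *\nDisallow: /images/",
        "other crawler only": "User-agent: BadBot\nDisallow: /",
    }
    for label, text in samples.items():
        print(f"{label}: {classify_robots(text)}")
```

A check like this can only flag candidates for review; deciding whether a blocked directory actually matters to a capture still requires looking at the site itself.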

The potential loss of 45% of our targeted websites to robots.txt exclusions might have stopped us from considering WAS as a viable alternative, but in January 2012 the Association of Research Libraries (ARL) released the Code of Best Practices in Fair Use for Academic and Research Libraries (Code). The Code is "a clear and easy-to-use statement of fair and reasonable approaches to fair use developed by and for librarians who support academic inquiry and higher education. . . .The Code deals with such common questions in higher education as . . . can libraries archive websites for the use of future students and scholars?" (Association of Research Libraries, 2012).

The release of the Code signaled a shift in the web archiving community's best practices approach to handling robots.txt files. The World Wide Web section of the Code begins with the principle that "It is fair use to create topically based collections of websites and other material from the Internet and to make them available for scholarly use" (Adler et al., 2012, p. 27). It goes on to specifically address robots.txt files:

Claims of fair use relating to material posted with "bot exclusion" headers to ward off automatic harvesting may be stronger when the institution has adopted and follows a consistent policy on this issue, taking into account the possible rationales for collecting Internet material and the nature of the material in question. (Adler et al., 2012, p. 27)

The release of the Code of Best Practices in Fair Use for Academic and Research Libraries provided the WAS team the opportunity and foundation to re-examine its robots.txt principles and practice. The forthcoming release of WAS Version 2.0 will allow curators to override/ignore robots.txt files. Although this feature was not in place at the time of our capture comparison, acting on our request WAS staff manually overrode the robots.txt restrictions for all the captures planned for the June 2012 election. With the overriding of the robots.txt files in WAS, we now had an analogous capture/data set for comparison.

Capture Comparison

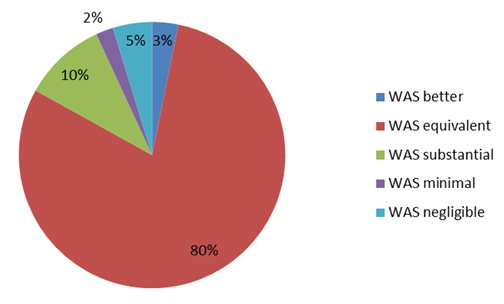

The primary measure of comparison is completeness of the capture. Does the capture include all of the content from the original site and is it reachable from the home page through the captured site's internal links and menus? In order to quantify our results, we defined five categories based on the relative completeness of the two captures. It is important to emphasize that these categories are relative, not absolute: "equivalent" captures may be equivalently bad in cases where both WAS and the manual tools failed to capture anything. Of the 189 sites captured in June 2012:

- 6 sites (3%) were better. The WAS capture was more complete than the local capture, containing significant content which our local software did not get.

- 151 sites (80%) were equivalent. The WAS and local captures were nearly identical in quantity and quality of captured content.

- 19 sites (10%) were substantial. The WAS capture contained the same primary content (pages and text) as the local capture but was missing some images, CSS files, or other subsidiary files that affected the look and feel of the site. This category was often the hardest to judge, since it depends on whether the missing content is "significant", which in turn is a judgment call about acceptable loss that still needs to be more fully defined. (See Next Steps, below.)

- 4 sites (2%) were minimal. The WAS capture contained only a fraction of the content contained in the local capture, sometimes as little as a single page, or the captured content could not be displayed by following links.

- 9 sites (5%) were negligible. WAS failed to capture or display any significant content in comparison to the local capture. Of these nine sites, six were Facebook pages, which WAS can only partially capture because the Heritrix crawler has limited ability to capture AJAX applications.

Figure 3: Comparison of WAS Capture Results With Traditional Capture Results.

The results of the comparison were encouraging. Leaving out the six Facebook pages, which were always considered of secondary value to the UCLA Archive, the better category (6 sites) roughly balanced the minimal and negligible categories combined (7 sites), indicating that the core content gathered by the two methods was overall equivalent. (The comparison did, however, highlight the fact that WAS and the three desktop capture programs we have traditionally used share many of the same weaknesses when dealing with issues like Flash, drop-down menus, and dynamic calendars.)
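The arithmetic behind these figures can be reproduced with a short script. This is only an illustrative sketch: the counts come from the category list above, while the variable and function names are our own and purely hypothetical.

```python
# Site counts per category, taken from the comparison list above.
counts = {
    "better": 6,
    "equivalent": 151,
    "substantial": 19,
    "minimal": 4,
    "negligible": 9,
}
FACEBOOK_NEGLIGIBLE = 6  # Facebook pages within the negligible category


def percentages(category_counts):
    """Return each category's share of all sites, rounded to a whole percent."""
    total_sites = sum(category_counts.values())
    return {
        name: round(100 * n / total_sites)
        for name, n in category_counts.items()
    }


total = sum(counts.values())
shares = percentages(counts)

# Sites where WAS lost most or all content, setting the Facebook pages aside.
losses_excluding_facebook = (
    counts["minimal"] + counts["negligible"] - FACEBOOK_NEGLIGIBLE
)

for name, n in counts.items():
    print(f"{name:<12}{n:>4}  {shares[name]:>3}%")
print(f"{'total':<12}{total:>4}")
print(
    f"better: {counts['better']}, "
    f"minimal + negligible without Facebook: {losses_excluding_facebook}"
)
```

The script confirms that the five categories account for all 189 sites and makes the "balance" comparison explicit as a count of sites on each side.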

A closer look at the substantial category also showed that only a few sites were missing core images or style sheets; the majority of the missing content (in comparison to the manual capture method) consisted of high-resolution photos in slideshow galleries. Not only were these considered low-value content, especially when clear mid-size photos were successfully collected, but their capture usually required extensive manual downloading of individual images, exactly the sort of labor-intensive capture process we were hoping to avoid.

One site in particular stood out as an interesting example of the complexities involved with judging capture quality: Dianne Feinstein's 2012 Senate campaign site. Both systems captured the same amount of content, but the site's home page re-directs first-time visitors (identified by the absence of a cookie) to a splash page. In our local capture, we disabled the script that calls the splash page by manually commenting it out in the archived home page. Since WAS does not allow curators to edit captured content, whenever a user attempts to view the WAS-captured home page (whether by following links to it or simply starting there as the system's default entry point for browsing the captured site), they are re-directed to the splash page on the original website, effectively being "kicked out" of the archive. We thus categorized the WAS capture as negligible, because users cannot get to the captured content without using the WAS search tool to bypass the home page. Though this is exactly the type of high-profile content we would be loath to lose, there is still the possibility that the hidden captured content could be "recovered" by ongoing improvements to the WAS display system and/or by providing an alternate entry point to the site.

Conducting the capture comparison gave us the empirical data we needed to make an informed decision as to whether WAS was a viable alternative to our current method. The finding that the core content gathered by WAS and by our manual capture and editing method was overall equivalent gave us the impetus to formally decide to transition to WAS for our web archiving needs.

Next Steps

Moving to WAS necessitates reworking and redesigning our entire workflow, beginning with Quality Assurance (QA). Currently, much of our QA occurs during the capture process itself, as staff can often see problems arising during the crawl, make adjustments on the fly, or cancel and re-try with different settings or software. Afterward we perform a more formal "detailed review of the website [which] involves examining every page, or at least a sufficiently large sample, to ensure that all pages and embedded files were downloaded and that the links between them are functional" (Gray & Martin, 2007, p. 3). When necessary we download missing content and edit link addresses to recreate the look and feel of the original site. Our QA process thus incorporates active measures to both identify and immediately correct problems.

This type of active QA is not possible in the WAS environment, where the captured content cannot be edited, so we will need to shift to a more selective, pre-capture QA process. High-priority campaign sites (such as ballot measures and high profile races) will be identified for test captures before the election, allowing us to refine the capture settings and identify sites for possible manual capture. We will also need to develop more explicit definitions of the substantial and minimal categories which define the line of acceptable loss. These definitions will be used in post-capture review, which will combine manual inspection of captured sites with analysis of WAS log files. Instead of looking for errors to fix, QA will focus on assessing the overall viability of the capture. Captures that fall below our baseline definition of acceptable loss will be removed from WAS and/or captured manually.

As capture tools evolve, more attention is being paid to enhancing their quality assurance features. WAS currently includes QA tools that focus on comparing the results of multiple captures. These tools produce reports that will be especially helpful when analyzing the results of test captures run with different capture settings. Although Version 2.0 of WAS is scheduled to include additional QA reports, one enhancement we would like to see in future WAS releases is something comparable to a feature of the Web Curator Tool developed by the National Library of New Zealand and the British Library. Version 1.6 of the Web Curator Tool includes a prune tool which "can be used to delete unwanted material from the harvest or add new material" (National Library of New Zealand & British Library, 2012, p. 47). We would also like the ability to view the results of WAS captures in "offline browsing" mode, allowing us to better evaluate which page elements were captured and which are still being pulled from live sites.
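In the absence of an offline browsing mode, one rough way to spot elements still being pulled from live sites is to scan an archived page for embedded resources whose host differs from the archive's own. The sketch below is an assumption-laden illustration, not a WAS feature: the host names are invented, it relies only on Python's standard library, and it presumes that captured resources are served from a single archive host, which may not hold for every replay system.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class ResourceCollector(HTMLParser):
    """Collect the URLs of embedded resources referenced by a page."""

    # Tag name -> attribute that holds the resource URL.
    URL_ATTRS = {"img": "src", "script": "src", "link": "href", "iframe": "src"}

    def __init__(self):
        super().__init__()
        self.urls = []

    def handle_starttag(self, tag, attrs):
        wanted = self.URL_ATTRS.get(tag)
        for name, value in attrs:
            if name == wanted and value:
                self.urls.append(value)


def live_resources(html, page_url, archive_host):
    """Return absolute URLs of resources not served from `archive_host`."""
    collector = ResourceCollector()
    collector.feed(html)
    live = []
    for url in collector.urls:
        absolute = urljoin(page_url, url)  # resolve relative references
        if urlparse(absolute).hostname != archive_host:
            live.append(absolute)
    return live


if __name__ == "__main__":
    # Hypothetical archived page and host names, for illustration only.
    sample = (
        '<img src="/a.png">'
        '<script src="http://campaign.example/x.js"></script>'
        '<link href="style.css">'
    )
    page = "http://archive.example/site/index.html"
    for url in live_resources(sample, page, "archive.example"):
        print("still live:", url)
```

A scan like this inspects only the static HTML, so resources requested by scripts at display time would still need to be checked in a browser.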

The final challenge will be how to integrate websites archived using WAS with the content in the existing Campaign Literature Archive. The Archive, which is a blend of archived websites and digitized print campaign ephemera, is hosted locally at UCLA by the UCLA Digital Library Program. WAS content is hosted remotely and archived in a California Digital Library preservation repository. WAS Version 2.0 is scheduled to contain an XML Export tool that will enable us to export the metadata records describing the individual WAS captures along with the URLs of the archived websites. These metadata records will be transformed and integrated into our local discovery system. In theory this will provide users with seamless access to the entire collection via the UCLA Digital Library Program's interface. If this method works, we can also continue to manually capture and locally archive a small number of high priority websites that fall into the minimal or negligible categories, such as the Dianne Feinstein site mentioned above.

The hallmark of the UCLA Online Campaign Literature Archive has always been the quality of our archived websites. Based on the results of our capture comparison we are confident that using WAS to archive our websites will not compromise the quality of the Archive's content. Our current method is completely dependent on the expertise of our existing staff. Our new model should be sustainable regardless of the staffing levels and expertise available. If all goes as planned our new method will be a blueprint for integrating websites archived remotely with websites and digitized printed content archived locally, all of which will be discoverable via a single local interface. The UCLA Online Campaign Literature Archive currently chronicles over a century of Los Angeles and California elections. Moving to this new sustainable model will enable the UCLA Library to continue to archive and document the next century.

References

[1] Adler, P. S., Aufderheide, P., Butler, B., & Jaszi, P. (2012). Code of best practices in fair use for academic and research libraries. Washington, D.C.: Association of Research Libraries.

[2] Association of Research Libraries. (2012). Code of best practices in fair use for academic and research libraries: Overview of the Code.

[3] Gray, G., & Martin, S. (2007). The UCLA Online Campaign Literature Archive: A case study. Paper presented at the 7th International Web Archiving Workshop, Vancouver, Canada.

[4] National Library of New Zealand, & British Library. (2012). Web Curator Tool user manual version 1.6.

[5] Niu, J. (2012). Functionalities of web archives. D-Lib Magazine, 18(3/4). http://dx.doi.org/10.1045/march2012-niu2

About the Authors


Gabriella Gray is the librarian for education and applied linguistics at the UCLA Charles E. Young Research Library. She has been the project director of the UCLA Online Campaign Literature Archive since 2002.


Scott Martin is the computer resource specialist for the Collections, Research, and Instructional Services department in the UCLA Charles E. Young Research Library. He has been the web archivist for the UCLA Campaign Literature Archive since it began collecting websites in 1998.
