
D-Lib Magazine

July/August 2015

Volume 21, Number 7/8

Developing an Image-Based Classifier for Detecting Poetic Content in Historic Newspaper Collections

Elizabeth Lorang, Leen-Kiat Soh, Maanas Varma Datla, Spencer Kulwicki

University of Nebraska—Lincoln

Point of contact for this article: Elizabeth Lorang, [email protected]

DOI: 10.1045/july2015-lorang

Abstract

The Image Analysis for Archival Discovery (Aida) project team is investigating the use of image analysis to identify poetic content in historic newspapers. The project seeks both to augment the study of literary history by drawing attention to the magnitude of poetry published in newspapers and by making the poetry more readily available for study, as well as to advance work on the use of digital images in facilitating discovery in digital libraries and other digitized collections. We have recently completed the process of training our classifier for identifying poetic content, and as we prepare to move to the deployment stage, we are making available our methods for classification and testing in order to promote further research and discussion. The precision and recall values achieved during the training (90.58%; 79.4%) and testing (74.92%; 61.84%) stages are encouraging. In addition to discussing why such an approach is needed and relevant and situating our project alongside related work, this paper analyzes preliminary results, which support the feasibility and viability of our approach to detecting poetic content in historic newspaper collections.

1 Introduction

We are entering the moment of the image in digital humanities, if the Digital Humanities 2014 conference can be taken as a bellwether.1 At the conference, multiple sessions dealt with digital images, notably their potential for exploring a range of research questions, from fuzzy matching of photographs of people in Broadway productions, to the automatic detection of prints made from the same woodcuts, to the image segmentation of manuscript materials at the level of the line and of character pairs, among others.2 Now, you may be thinking, "The moment of the image? We've been dealing with images since we started digitizing materials for libraries more than 20 years ago." And certainly, in digitizing the cultural record, we have created and continue to create millions and millions of digital images. Our uses of digital images, however, have been quite limited: most typically they allow us to perform transcription or derive machine-generated text, or they stand in to the user as a facsimile representation. As the presentations at Digital Humanities 2014 and other emerging projects dealing with digital images illustrate, however, significant work remains to be done in order to leverage the full information potential of images within the digital humanities and digital libraries communities.3

The Image Analysis for Archival Discovery project (Aida) seeks to leverage the information potential of digital images for discovery and analysis within the digital libraries and digital humanities communities. We have begun with the challenge of identifying poetic content in historic newspapers through an image-based approach. This work stands to augment the study of literary history by drawing attention to the magnitude of poetry published in newspapers and by making the poetry more readily available for study, as well as to advance work on the use of digital images in facilitating discovery in digital libraries and other digitized collections. This paper describes the first stage of our work to identify poetic content in historic newspapers, the training of a machine learning classifier. This paper is intended both for a broad digital libraries/digital humanities audience interested in the general principles, ideas, and applications of our work, as well as for a more specialized audience interested in specific details of implementation, including our algorithms. A later paper will describe the results and analysis of wide-scale deployment, as we ultimately process more than 7 million page images from Chronicling America, the publicly accessible archive of the United States' National Digital Newspaper Program.4

The early results of our classifier are encouraging; training results indicated 90.58% precision, with a recall of 79.4%. These percentages dropped in the testing stage of creating the classifier, but the results remain encouraging as a proof of concept for using image analysis as a solution for this work. In addition, the process of training the classifier has helped to illuminate potential challenges including those presented by varying qualities of the original newspapers' design and layout and by damage and degradation to the originals over time, as well as those introduced during microfilming and subsequent digitization. Therefore, in addition to exploring image analysis for archival discovery, our present project also explores the affordances and limitations of preservation and access strategies.

2 Why?

Vast digital collections are of limited use if researchers cannot access the materials of interest to them. While this statement may seem banal at first, there in fact remains a critical gulf between the amount of material that is available to researchers and the ability researchers have to find the materials they need within digitized collections of primary materials. Writing from the perspective of literary scholars wanting to do large-scale text analysis, for example, Ted Underwood has put the problem in this way: "Although methods of literary analysis are more fun to discuss, the most challenging part of distant reading may still be locating texts [within digitized collections] in the first place."5 In a similar vein, librarians Jody L. DeRidder and Kathryn G. Matheny have discussed the difficulty of finding items of interest in voluminous digital collections of primary materials; their case study demonstrates that at the same time as the size and number of digital collections are increasing, the interfaces to these collections and expectations for how the collections can be used have remained largely unchanged for twenty years.6

One way in which the basic functionality of digital libraries has stalled is that text nearly always serves as the primary, and most often the only, basis for retrieval and analysis in conventional systems. Such text-based querying and retrieval does not meet all, or even all routine, use cases, and singular focus on text-based retrieval limits the types of questions researchers can imagine and pursue within the collections. Regardless of the questions researchers have, or the materials they seek to study, the standard methods for retrieving information of relevance in digital collections are text-based. Such collections are locked in to, and by, a model that positions text-based querying as normative and fail to imagine additional models of engagement. One way to open up digital collections to new types of questions and modes of discovery is through image analysis.

Image-based methods for discovery and analysis are in development in academic and industry sectors, but a number of challenges remain. One of the most rapidly developing areas in image analysis deals with contemporary digital photographs, such as those shared on Flickr, or with images of primarily visual historical materials.7 There has been far less work in investigating image analysis for primarily textual materials to improve the tasks of detection and examination. In launching the Internet Archive's Book Images Project to "unlock the imagery of the world's books," for example, Kalev H. Leetaru and Robert Miller describe image search as "a tool exclusively for searching the web, rather than other modalities like the printed word."8 Furthermore, while image segmentation is routinely performed as part of optical character recognition (OCR) processes for dealing with textual materials, such image segmentation is done for the purpose of deriving text, not as a means or method of analysis in its own right. For a variety of use cases and research areas, however, visual cues or visual characteristics of texts might be the best path forward for discovery and retrieval in digital collections, and it is this innovative approach that our project advances.

The Aida team's present project to identify poetic content in historic newspapers has both a broad goal to explore new strategies for identifying materials of relevance for researchers within large digital collections, as well as a very specific goal within literary studies. Writing about poetry publishing in Victorian England, Andrew Hobbs and Claire Januszewski have estimated that four million poems were published in local papers during Queen Victoria's reign alone.9 There is almost certainly a similarly vast corpus of poems in early American and U.S. newspapers. To date, the identification of these poems has relied on scholars identifying and indexing these works by hand, and researchers quickly run up against their own limitations for processing the materials. Yet the recovery of these poems is significant because their recovery will cast light on the very narrow histories of poetry presently at the center of much research, instruction, and public perception of poetry. These narrow histories take into account far less than 1% of the poetic record and have been crafted on exclusionary principles—exclusionary to both authors and readers. The task of identifying these poems is a crucial first step to reconfiguring the literary record.

Our approach to achieve both goals is a hybrid image processing and machine learning strategy described in greater detail in the Methods section. This approach is significant in its ability to deal with multi-language corpora and in its potential for generalization. For poetic content, the approach should be able to find verse in Spanish, German, Cherokee, or Yiddish, for example, just as easily as in English.10 Further, within newspapers, image processing and analysis may be used to identify obituaries, birth and marriage announcements, tabular information such as stock reports and sports statistics, and advertisements, among other visually distinct items. For both poetry and these other genres, image analysis can help facilitate tasks to detect as well as to filter information, when there are millions of pages involved. Depending on the use case, image analysis might be fully automatable or function as a capable assistant or first-step filter.

3 Related Work

A diverse array of related work informs the scope, goals, and methods of our current project. Within literary and historical studies, researchers are increasingly turning to large-scale collections of historic newspapers to address a range of research questions. The majority of researchers involved in corpus-scale analysis and data mining have focused on natural language processing techniques (and therefore deal with electronic text). For example, the Viral Texts project is investigating the culture of reprinting among nineteenth-century newspapers and magazines by identifying reused text.11 Other researchers have used topic modeling for uncovering hidden structures in collections of historic newspapers, working from both highly accurate hand-keyed transcriptions and less accurate text generated via optical character recognition.12 Significantly, in "Mining for the Meanings of a Murder: The Impact of OCR Quality on the Use of Digitized Historical Newspapers," Carolyn Strange, Daniel McNamara, Josh Wodak, and Ian Wood found that the presence of "metadata to distinguish between different genres ... had a substantial impact" on their work. While the genres of significance for their research were all prose genres, their study nonetheless suggests the ways in which finer-grained generic distinctions within newspaper corpora may affect analytical work.13 Likewise, Underwood has stated that genre division and identification below the page level are logical next steps for his text-based machine learning approach to genre identification within the HathiTrust corpus.14

In computer and information sciences, the automated identification of features such as tables and indices in journals and books has been a research area for many years.15 Researchers in several domains also have used visual features to aid in identifying illustrations in scholarly journals or duplicated pages in book scanning projects.16 Page layout and segmentation analysis is another area of investigation, in which the goal is to identify textual or graphical zones and distinguish among zones of a page. Indeed, page layout and segmentation analysis are active research areas for digitized newspapers and other periodicals. Iuliu Konya and Stefan Eickeler, for example, have attempted to read in an image of a periodical page and output "a complete representation of the document's logical structure, ranging from semantically high-level components to the lowest level components."17 Other work with digital newspaper collections has focused on automatically detecting front pages of newspapers.18 Layout and segmentation analyses, however, do not take the further step of classifying contents within zones, such as by genre.

Our research indicates that Aida is the first project to use image analysis for the purposes of generic identification in historic newspapers. Others are working in similar directions, such as the Visual Page research team, which has been analyzing visual features of "single-author books of poetry published in London between 1860-1880." The project team has adapted Tesseract, an OCR engine, to "recognize visual elements within document page elements and extracted quantifiable measures of those visual features."19 Members of the Visual Page team also received funding in 2014 to investigate applications of image analysis within HathiTrust.20 Related work is thus converging around several subjects: new methods for and approaches to research in historical newspapers; connecting researchers with the materials of relevance to them in large digital collections; and seeking methods beyond text-based approaches, though complementary to them, for discovery and analysis in large digital collections. Aida's current work is at the nexus of these issues.

4 Methods

Our approach is to use supervised machine learning to create a classifier that will be able to identify image snippets as containing or not containing poetic content. Our reason for beginning with a supervised approach is that unsupervised machine learning is better suited for knowledge discovery and data mining, especially when there is not sufficient domain knowledge. But in the present case, we do have domain expertise: we can determine what is a poem and what is not a poem within the newspaper pages. Thus, we can manually label an image snippet very accurately as a poem image or a non-poem image for training purposes. As a result, it is much more straightforward to use a supervised machine learning approach. Therefore, the first stage of our work was to train a classifier to recognize poetic content in newspaper pages in the Chronicling America collection. This required creating a training set of images, determining the features to be analyzed, developing algorithms to describe and compute those features, and implementing a neural network and decision tree for training and testing.

For the initial training, we prepared a set of 210 training snippets, with 99 snippets containing poetic content and 111 snippets that had no poetic content. In creating this set, project team members identified newspaper pages within the Chronicling America corpus that represented geographic, temporal, and material diversity. (The "Next Steps" section below details our work to create snippets by automated means for wide-scale deployment.) Each snippet includes only text/graphics from a single column of the page. Although some snippets initially included lines marking the break between columns, we later removed these lines as they negatively affected the accuracy of the classifier. Snippets were all prepared at the same size, 180 pixels wide by 288 pixels tall, at 72 ppi.

Our method then was to perform a series of pre-processing steps on each image snippet to reduce noise. Pre-processing creates better images for feature extraction. On each snippet, we run a series of blurring, binarization, and consolidation algorithms to convert a regular image to a black and white version, which makes visual cues easier to measure and extract, as represented in the following algorithm:

Algorithm: Pre-Processing

Input: an original image, i_original, which is a snippet of a newspaper page

Output: a processed image, i_solid, that is ready for feature extraction

1. Perform blurring on i_original to obtain i_blurred

2. Perform bi-Gaussian binarization on i_blurred to obtain i_binary

3. Perform further pixel consolidation on i_binary to obtain i_solid

End Algorithm

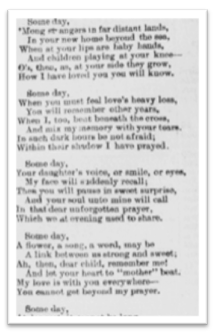

For blurring, we use a grid averaging algorithm to compute, for each pixel, the average RGB value of surrounding pixels and to replace the value of the pixel. The blurring algorithm uses a grid, the size of which is determined by a depth value passed in to the program (presently either 3, 5, or 7). The pixel at the center of the grid is replaced with the average RGB value of surrounding pixels in the grid. To do so, the algorithm begins by checking to see if the corners of the grid are present in the target zone of the current pixel. For a pixel at the i-th column and j-th row, it looks at the pixels at the corners of the grid determined by the depth of blurring. If all four corners exist around the current pixel, it computes the average of the entire grid. If the upper left corner is missing, it computes only the average of the pixels to the right and below the current pixel and vice versa for all other corners. If two corners are missing, the pixel is at the edge of the image but not at a corner. Therefore only the pixels to one side of the image are used for the averaging. Using this technique, the blurring algorithm is dynamic and is not dependent on the size of the image. Figures 1 and 2 below show the result of blurring on an original image snippet.
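The grid averaging described above can be sketched in a few lines of Python. This is a minimal illustration rather than the project's code: the function name `grid_average_blur`, the list-of-rows image representation, and the choice to include the center pixel in the average are all assumptions. Clamping the grid to the image bounds stands in for the corner checks described in the text, so pixels near a corner or edge are averaged over only the part of the grid that exists.

```python
def grid_average_blur(image, depth=3):
    """Blur an image given as a list of rows of (r, g, b) tuples.

    `depth` is the side length of the averaging grid (3, 5, or 7).
    Hypothetical helper: the name and data layout are assumptions.
    """
    if depth not in (3, 5, 7):
        raise ValueError("depth is expected to be 3, 5, or 7")
    height, width = len(image), len(image[0])
    half = depth // 2
    blurred = []
    for j in range(height):          # j-th row
        row = []
        for i in range(width):       # i-th column
            # Clamp the grid to the image so that missing corners
            # simply shrink the region that is averaged.
            top, bottom = max(0, j - half), min(height - 1, j + half)
            left, right = max(0, i - half), min(width - 1, i + half)
            count = (bottom - top + 1) * (right - left + 1)
            sums = [0, 0, 0]
            for y in range(top, bottom + 1):
                for x in range(left, right + 1):
                    for channel in range(3):
                        sums[channel] += image[y][x][channel]
            row.append(tuple(total // count for total in sums))
        blurred.append(row)
    return blurred


# A 3x3 black image with one bright pixel in the middle.
sample = [[(0, 0, 0)] * 3 for _ in range(3)]
sample[1][1] = (90, 90, 90)
result = grid_average_blur(sample, depth=3)
```

Because the window is clamped rather than padded, the same function works for any image size, which mirrors the claim that the blurring does not depend on the dimensions of the snippet.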

Figure 1: Original Image

Figure 2: Blurred Image (3x3 blurring)

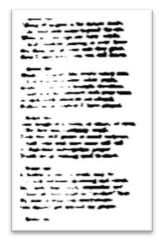

After blurring, the image is converted to a binary image. We perform bi-Gaussian binarization to convert the image to a new image that includes only object and background intensity pixels. This bi-Gaussian binarization process is based on Haverkamp, Soh, and Tsatsoulis, whose method automatically finds the threshold in remote sensing images to convert them into binary images.21 Briefly, we first compute the average intensity of the image. Given this average intensity value, we further compute the mean and standard deviation of the intensity levels of pixels whose values fall below the average intensity value, and assign these two values to the first Gaussian curve's initial parameters. Likewise, we compute the mean and standard deviation of the intensity levels of pixels whose values fall above the average intensity value and find the second Gaussian curve's initial parameters. We then use the curve-fitting algorithm (Young and Coraluppi, 1970) to find a mixture of the two Gaussian curves.22 We also use a maximum likelihood method (Kashyap and Blaydon, 1968) to identify the best-fitting mixture, and this mixture represents the bi-Gaussian mixture describing the intensity values in our image snippet.23 Then, we find the weighted center of the mixture and use the center as the binarization threshold.24 Figures 3 and 4 show the result of binarizing a blurred image snippet.
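As a partial sketch of the binarization step, the Python below computes only the initial parameters of the two Gaussian curves, as described above, and applies a threshold once one is known. It does not reproduce the curve-fitting or maximum likelihood steps from the cited papers. The function names are hypothetical, the use of population standard deviation is an assumption, and the midpoint threshold in the usage lines is a placeholder standing in for the weighted center of the fitted mixture.

```python
from statistics import mean, pstdev


def initial_bigaussian_parameters(pixels):
    """Return ((mean, std), (mean, std)) for the dark and light pixel groups.

    `pixels` is a flat list of grayscale intensities (0-255).
    Pixels are split around the average intensity of the image.
    """
    average = mean(pixels)
    below = [p for p in pixels if p < average]
    above = [p for p in pixels if p > average]
    if not below or not above:
        raise ValueError("cannot split a uniform image into two groups")
    return (mean(below), pstdev(below)), (mean(above), pstdev(above))


def binarize(pixels, threshold):
    """Map each pixel to object (0, black) or background (255, white)."""
    return [0 if p < threshold else 255 for p in pixels]


pixels = [10, 20, 30, 200, 220, 240]
(dark_mean, dark_std), (light_mean, light_std) = initial_bigaussian_parameters(pixels)

# Placeholder only: the article derives the threshold from the fitted mixture,
# not from the midpoint of the two initial means.
placeholder_threshold = (dark_mean + light_mean) / 2
binary = binarize(pixels, placeholder_threshold)
```

The `ValueError` branch corresponds to images with no usable split between dark and light pixels, where no meaningful threshold can be derived from these initial estimates.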

Figure 3: Blurred Snippet (3x3 blurring)

Figure 4: Binary Snippet

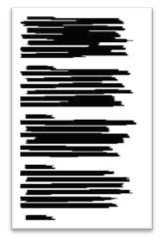

Each snippet then undergoes pixel consolidation, a much more aggressive approach to blurring that has proven effective for evaluating and analyzing certain features in our training process. Unlike standard blurring, the pixel consolidation algorithm operates on the binarized version of a snippet. The image is cleaned, and all stray black spots are cleared. For this step, the number of white pixels in each column of the image is counted. If the percentage of white pixels in a column is greater than a predetermined threshold, the entire column is assigned to background (white) pixels. Then, the algorithm goes through each pixel in a row, counts the total object (black) pixels in that row, and saves the indices of the first and last object pixels in the row. If the total number of object pixels in a row is greater than a given threshold, all of the pixels from the start index to the end index in the row are assigned to object pixels. Figures 5 and 6 show the result of pixel consolidation. The consolidated image better highlights the shape of the poem contained in the snippet, allowing the next step, feature extraction, to compute values for a set of features describing the image.
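The two passes of pixel consolidation can be illustrated with a short Python sketch. The article does not give the threshold values, so `column_white_ratio` and `row_object_count` below are hypothetical parameters, as are the function name and the 0/1 encoding of background and object pixels.

```python
OBJECT, BACKGROUND = 1, 0  # assumed encoding: 1 = black (object), 0 = white


def consolidate(binary, column_white_ratio=0.9, row_object_count=3):
    """Return a consolidated copy of a binary image (list of rows of 0/1)."""
    height, width = len(binary), len(binary[0])
    result = [row[:] for row in binary]

    # Pass 1: clear columns that are mostly white, removing stray spots.
    for x in range(width):
        white = sum(1 for y in range(height) if result[y][x] == BACKGROUND)
        if white / height > column_white_ratio:
            for y in range(height):
                result[y][x] = BACKGROUND

    # Pass 2: in rows with enough object pixels, fill everything between
    # the first and last object pixel so the text line becomes a solid bar.
    for row in result:
        indices = [x for x, value in enumerate(row) if value == OBJECT]
        if len(indices) > row_object_count:
            for x in range(indices[0], indices[-1] + 1):
                row[x] = OBJECT
    return result


snippet = [
    [1, 0, 1, 0, 1, 0],
    [1, 1, 0, 1, 1, 0],
    [0, 0, 0, 0, 0, 1],  # a stray spot in an otherwise blank row
    [0, 0, 0, 0, 0, 0],
]
solid = consolidate(snippet, column_white_ratio=0.7, row_object_count=1)
```

In this toy example the stray spot in the third row disappears during the column pass, and the two text rows become solid bars, which is the shape-highlighting effect described for the consolidated snippets.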

Figure 5: Binary Snippet

Figure 6: Consolidated Snippet

With the pre-processing complete, and prior to feature extraction, each snippet is processed by two set-up algorithms. The first counts both the length of background pixels prior to the first object pixel and the length of background pixels after the final object pixel in a row. The second counts the continuous background pixels in each column and stores the values in a 2D integer matrix. The feature computation methods use these values to calculate attributes of the three features:

Algorithm: Feature Extraction

Input: a processed image, i_solid, that is ready for feature extraction

Output: a computed set of attributes saved to the data structure representing i_solid in attributesList

1. Perform computeColumnWidths on i_solid and store values in leftColumnWidths and rightColumnWidths

2. Perform computeRowDepths on i_solid and store values in rowDepths

3. Perform computeMarginStats on i_solid and store them in attributesList

4. Perform computeJaggedStats on i_solid and store them in attributesList

5. Perform computeStanzasStats on i_solid and store them in attributesList

6. Perform computeLengthStats on i_solid and store them in attributesList

End Algorithm
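The two set-up steps at the top of this algorithm might look like the following Python sketch. The function names echo `computeColumnWidths` and `computeRowDepths`, but the bodies are assumptions based on the prose description. In particular, recording the full row width for rows with no object pixels, and storing the column runs as a list of lists, are illustrative choices not taken from the article.

```python
OBJECT, BACKGROUND = 1, 0  # assumed encoding of the consolidated image


def compute_column_widths(solid):
    """Per row: background length before the first and after the last object pixel."""
    left_widths, right_widths = [], []
    for row in solid:
        indices = [x for x, value in enumerate(row) if value == OBJECT]
        if not indices:
            # Assumption: a blank row counts as all margin on both sides.
            left_widths.append(len(row))
            right_widths.append(len(row))
        else:
            left_widths.append(indices[0])
            right_widths.append(len(row) - 1 - indices[-1])
    return left_widths, right_widths


def compute_row_depths(solid):
    """Per column: lengths of each continuous run of background pixels."""
    height, width = len(solid), len(solid[0])
    depths = []
    for x in range(width):
        runs, current = [], 0
        for y in range(height):
            if solid[y][x] == BACKGROUND:
                current += 1
            elif current:
                runs.append(current)
                current = 0
        if current:
            runs.append(current)
        depths.append(runs)
    return depths


solid = [
    [0, 1, 1, 1, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1, 0],
]
left_widths, right_widths = compute_column_widths(solid)
row_depths = compute_row_depths(solid)
```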

The feature computation methods compute values for three features: left margin whitespace; whitespace between stanzas; and content blocks with jagged right-side edges, which are the result of varying line lengths in poetic content and are in contrast to justified blocks of much newspaper content. While future-stage, comparative work might have the computer discern the most salient features of the true and false snippets to be used for comparison, we began by discerning those features that appear to us most useful as humans in distinguishing the presence of poetry in the images. Since one of our team members had significant research experience with poems published in newspapers and had elsewhere cataloged approximately 3,000 such poems by hand while reviewing print originals, microfilm copies, and digital images of originals and microfilm copies, we began with the features that seemed the most relevant in that work.25 Reassuringly, we identified some overlapping features with the Visual Page project (margins and stanzas), which might further suggest the relevance of these features. At present, an analysis of jaggedness is unique to our project, likely in part because jaggedness emerges as a feature in comparison to the justified prose text.

As the first stage of feature extraction, we compute margin statistics: the mean, standard deviation, maximum, and minimum of the margin on the left of the image. We include this feature in our algorithm because poetic content typically was typeset with wider left and right margins than non-poetic text. At present, our design focuses on left margins only, since we evaluate qualities of the right side of the poem in relation to jaggedness. The jaggedness algorithm computes the mean, standard deviation, maximum, and minimum of the background pixels after the final object pixel in each row. We base our "jaggedness" statistics on the column widths on the right of each image.

Finally, we extract feature attributes that determine the presence of stanzas by looking for whitespace between stanzas. The stanza algorithm computes the mean, standard deviation, and maximum and minimum of the measure of white space between blocks of text. It computes the length of background pixels between paragraphs or stanzas and calculates these attributes. We base our stanza statistics on the measure of the occurrence of spacing between blocks of text.
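All three features reduce to the same four summary statistics over a list of measurements, which a short Python sketch can make concrete. The names `summarize` and `stanza_gaps` are hypothetical. Using population standard deviation, and measuring stanza gaps as runs of fully blank rows between rows of text, are assumptions made for illustration, since the article does not specify either.

```python
from statistics import mean, pstdev

OBJECT = 1  # assumed encoding: 1 = object pixel, 0 = background


def summarize(values):
    """Mean, standard deviation, maximum, and minimum of a list of measures."""
    if not values:
        return {"mean": 0, "std": 0, "max": 0, "min": 0}
    return {
        "mean": mean(values),
        "std": pstdev(values),
        "max": max(values),
        "min": min(values),
    }


def stanza_gaps(solid):
    """Lengths of runs of blank rows that fall between rows containing text."""
    gaps, current, seen_text = [], 0, False
    for row in solid:
        if any(value == OBJECT for value in row):
            if seen_text and current:
                gaps.append(current)
            seen_text, current = True, 0
        elif seen_text:
            current += 1
    return gaps  # leading and trailing blank rows are ignored


# Margin statistics from per-row left margin widths (in pixels).
margin_stats = summarize([2, 2, 4, 2])

# Stanza statistics from a toy consolidated image, one list per row.
solid = [[1, 1], [1, 0], [0, 0], [0, 0], [0, 1], [0, 0], [1, 1], [0, 0]]
gaps = stanza_gaps(solid)
stanza_stats = summarize(gaps)
```

Jaggedness statistics would follow the same pattern, with `summarize` applied to the per-row background lengths on the right side of the image.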

Once all of the feature attribute data are extracted, the data are compiled into a dataset compatible for use with the WEKA Artificial Neural Network. Our program uses the WEKA system library, developed by the machine learning group at the University of Waikato. The WEKA Adapter uses an artificial neural network to classify the image snippet.

The artificial neural network (or ANN) uses back propagation between two layers of nodes to analyze the attributes and output a true or false flag to indicate the existence of poetic content in the snippet. Attributes are translated into individual nodes that communicate with a hidden layer. These connections are initially weighted, and after a predetermined number of iterations the weights are increased or decreased depending on the prediction each node makes. If it makes the right classification, the connections from the node are increased in weight; if it makes the wrong classification, the connections are decreased in weight. Over the iterations, the ANN is optimized such that the attributes that contribute most to determining the instance have the most weight, and through back propagation, the ANN reduces the weight of the less deterministic attributes.

To develop an ANN that has the highest possible accuracy, we use n-fold (where n = 10) cross-validation: the set of image snippets is divided into n subsets and, for every fold, n - 1 subsets are used as the training set and one is used as the testing set. This network is later used to classify the dataset and determine whether each instance is a poem or not.
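The fold structure of n-fold cross-validation can be shown with a small Python sketch. This is not WEKA's implementation: the function name `n_fold_splits`, the seeded shuffle, and the round-robin assignment of items to folds are assumptions chosen to keep the example short.

```python
import random


def n_fold_splits(items, n=10, seed=0):
    """Yield (training, testing) pairs, one per fold."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    # Deal the shuffled items into n subsets of near-equal size.
    folds = [shuffled[i::n] for i in range(n)]
    for held_out in range(n):
        training = [
            item
            for index, fold in enumerate(folds)
            if index != held_out
            for item in fold
        ]
        yield training, folds[held_out]


# 210 snippet identifiers, matching the size of the set described earlier.
splits = list(n_fold_splits(range(210), n=10))
```

With 210 snippets and n = 10, each fold trains on 189 snippets and tests on the remaining 21, and every snippet is used for testing exactly once.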

5 Results

Overall, the average accuracies achieved from our 10-fold cross validation of training our ANN are very encouraging. In the training stage, 79.44% of image snippets that feature poetic content were correctly identified as poem image snippets; 91.75% of image snippets that do not feature poetic content were correctly identified as non-poem image snippets (Table 1).

| | Predicted True | Predicted False |
|---|---|---|
| Is True | 79.44% | 20.56% |
| Is False | 8.26% | 91.75% |

Table 1. Training results, when using the 5x5 averaging algorithm for blurring followed by the pixel consolidation algorithm.

| | Predicted True | Predicted False |
|---|---|---|
| Is True | 61.84% | 36.18% |
| Is False | 20.70% | 79.30% |

Table 2. Testing results, when using the 5x5 averaging algorithm for blurring followed by the pixel consolidation algorithm.

The precision of the classifier in the training stage was 90.58%, and recall was 79.4%. Precision is the quotient of the total number of true positive classifications (poem snippets classified as poem snippets) divided by the total of all snippets classified as true (whether they are true positives or false positives). It measures how often we are correct when we predict that an image contains poetic content. Recall is the total number of true positive classifications divided by the total number of snippets with poetic content, whether the classifier identified them as true (true positives) or false (false negatives). Recall measures how many poem snippets we correctly identify out of all poem snippets. In the testing stage, both precision and recall dropped: precision was 74.92% and recall was 61.84% (see Table 2 above for complete statistics).
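As a worked check, the reported precision values can be recovered from the "Predicted True" column of Tables 1 and 2. The Python sketch below applies the precision formula to the two row percentages directly, which treats the poem and non-poem rows as equally weighted. That weighting is an inference from the arithmetic rather than something the article states, and the function name is hypothetical. Recall is simply the "Is True / Predicted True" cell.

```python
def precision_from_row_rates(true_positive_rate, false_positive_rate):
    """Precision (as a percentage) from per-row percentages.

    true_positive_rate:  % of poem snippets predicted true
    false_positive_rate: % of non-poem snippets predicted true
    """
    return 100 * true_positive_rate / (true_positive_rate + false_positive_rate)


training_precision = precision_from_row_rates(79.44, 8.26)
testing_precision = precision_from_row_rates(61.84, 20.70)

print(f"training precision: {training_precision:.2f}%")
print(f"testing precision:  {testing_precision:.2f}%")
```

Rounded to two decimal places, these come to 90.58% and 74.92%, matching the figures given in the text.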

While these testing results are not ideal, they are not as discouraging as they might at first appear. The drop in the classification accuracies from the training set to the testing set indicates an over-fitting of the classifier. In other words, we over-trained the classifier to fit the image snippets in the training set, so that when the classifier was subsequently presented with previously unseen image snippets, it did not adapt very well. One possible cause of the over-fitting is that the training dataset may not have been sufficiently representative of the set of all possible image snippets. Chronicling America, after all, features newspapers from 1836 to 1923, and over these years, the format and appearance of newspapers shifted in both subtle and dramatic ways. Also possible is that the visual cues we currently use for analysis do not adequately capture the variety and diversity of the snippets. With this understanding, we plan to broaden the training set even further as well as explore additional visual cues. In addition, we are examining snippet qualities that caused the classifier to fail.

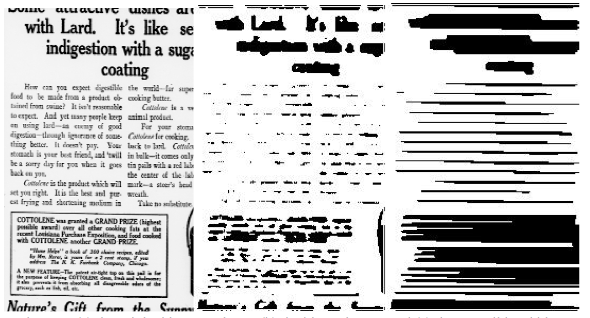

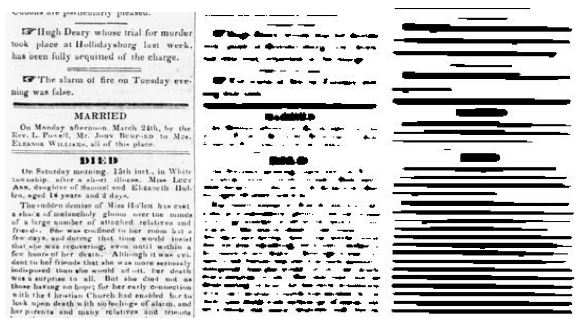

Several features pose challenges for the classifier, leading to false negatives and false positives. The following figures illustrate some of the issues that can contribute to false positives and false negatives. In Figure 7, the original image snippet includes multiple columns of text within a single column of the newspaper. The snippet also includes a bordered textbox alongside the left-most outline of a graphic. The multi-line, centered title in combination with these other features contributes to a sufficient amount of jaggedness as calculated by the classifier. In addition, there is comparatively generous white space between text lines and text blocks, which may appear to mimic stanzas, based on our current methodology. These measures help lead to a false positive.

Figure 7: (a) the original image snippet, (b) the binary image, and (c) the consolidated binary image.

Our classifier mistakenly identified it as a poem image.

In Figure 8, the multiple, short announcements and inconsistencies in pixel intensity across the rows cause the image processing algorithm to pick up multiple lines of object pixels with varying lengths, leading to noticeable jaggedness. White space between text lines and text blocks may be a factor here as well. As a result, the classifier also identified this snippet as a poem image—another false positive.

Figure 8: (a) the original image snippet, (b) the binary image, and (c) the consolidated binary image.

Our classifier mistakenly identified it as a poem image.

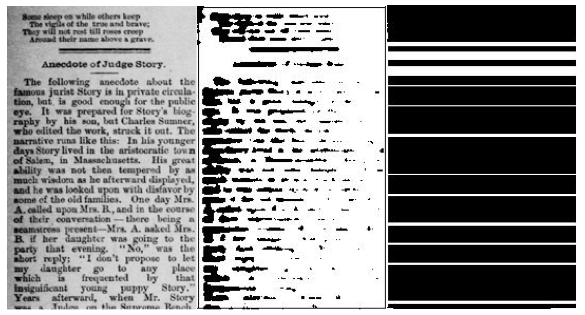

While too high a percentage of false positives will cause problems at scale—if too many false positives come through, we are back to needing to evaluate snippets by hand/eye—the larger problem is false negatives. Several factors thus far have contributed to snippets that contain poems being classified as false. These include original image quality, text bleed-through, and the ratio of poetic content to non-poetic content. In Figure 9, for example, the original image was of low quality: contrast was low and range effect was present (i.e., the left side of the image was darker than the right side). As a result, our image processing algorithm was stressed and generated a fully consolidated, binary image. In addition to the problem caused by the range effect, the poetic content occupied only the top 1/5 of the image. The combination of these factors caused the classifier to fail in classifying this snippet as a poem snippet.

Figure 9: (a) the original image snippet, (b) the binary image, and (c) the consolidated binary image.

Our classifier mistakenly identified it as a non-poem image.

Figure 10 documents an image snippet that suffers greatly from "bleed-through," where text from the reverse side of the newspaper page is visible. As a result, our dynamic thresholding algorithm was not able to identify a viable threshold to separate the image into object and background pixels, as the entire image was seen as a background image. Thus, no meaningful feature values were computed. Though to human inspection this image clearly depicts a poem, our system failed to identify it as such.

Figure 10: The original image snippet with significant bleed-through.

Our algorithm failed to identify a viable threshold to classify the image into object and background pixels.

Our classifier mistakenly identified the snippet as a non-poem image.
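The failures in Figures 9 and 10 both end in a binary image dominated by a single class of pixel, from which no meaningful features can be computed. As a purely illustrative sketch (not part of the system described in this article), a check along the following lines could flag such degenerate binarizations for review instead of letting them pass silently to the classifier. The function names and the 1% and 99% cutoffs are assumptions made for the example.

```python
def object_fraction(binary):
    """Share of pixels marked as object (1) in a binary image given as rows of 0/1."""
    total = sum(len(row) for row in binary)
    if total == 0:
        return 0.0
    return sum(sum(row) for row in binary) / total


def is_degenerate(binary, min_fraction=0.01, max_fraction=0.99):
    """True when the image is almost entirely background or almost entirely object.

    The cutoffs are hypothetical; suitable values would have to be tuned on real snippets.
    """
    fraction = object_fraction(binary)
    return fraction < min_fraction or fraction > max_fraction


all_background = [[0, 0, 0], [0, 0, 0]]   # e.g., no viable threshold was found
mixed = [[1, 0, 1], [0, 1, 0]]            # a usable mix of object and background

print(is_degenerate(all_background))
print(is_degenerate(mixed))
```

A snippet flagged this way could be set aside for re-processing or manual inspection, which is one possible way to keep image-quality failures from being counted as non-poem classifications.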

Overall, most of the false positives and false negatives are due to the failure of our image processing module to adequately capture the visual cues in the image snippets. Our classifier module, on the other hand, is quite good at classifying snippets correctly once it is given proper visual cues. The failures in capturing visual cues were caused by poor image conditions (e.g., low contrast, bleed-through) and distracting cues (e.g., additional columns, textboxes, additional lines/diagrams). Specific types of newspaper content also appear likely to pose challenges, including dialogue and some advertisements. Addressing these issues to improve overall classification accuracy is an important next step. Despite the issues with feature extraction, the high precision and recall during the training stage of developing the classifier (90.58% and 79.4%, respectively) are encouraging and suggest that the approach is viable once we address the challenges of feature extraction.
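For readers less familiar with the two measures, the following minimal sketch shows how precision and recall follow from counts of true positives, false positives, and false negatives. The function name is an assumption, and the counts are invented for illustration; they are not results from this study.

```python
def precision_recall(true_pos, false_pos, false_neg):
    """Return (precision, recall) from raw classification counts.

    precision = TP / (TP + FP): of the snippets labeled as poems, how many are poems.
    recall    = TP / (TP + FN): of the snippets that are poems, how many were found.
    """
    predicted_pos = true_pos + false_pos
    actual_pos = true_pos + false_neg
    precision = true_pos / predicted_pos if predicted_pos else 0.0
    recall = true_pos / actual_pos if actual_pos else 0.0
    return precision, recall


# Hypothetical counts, chosen only to make the arithmetic easy to follow.
precision, recall = precision_recall(true_pos=80, false_pos=20, false_neg=40)
print(f"precision = {precision:.2%}, recall = {recall:.2%}")
```

False positives lower precision and false negatives lower recall, so a discovery task that is more concerned with missed poems than with extra manual checking is, in these terms, more concerned with recall.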

6 Ongoing Efforts and Next Steps

In addition to re-evaluating our feature set and exploring additional visual cues, an immediate next step is to broaden the training set to include more, and more diverse, image snippets to address the problem of over-fitting the classifier. To create a larger training set, we have recently completed work on a process for creating image snippets automatically. Thus far, we have prepared the image snippets by hand, but doing so going forward would all but defeat the purpose of a computational approach to the problem. The snippet generator will allow us to create a significantly larger training set as well as to generate the millions of snippets required for full, wide-scale deployment.

Article-level segmentation of newspaper pages remains an ongoing area of high-level research, and poses a significant challenge in its own right.26 For the present project, we determined that segmenting the newspaper pages into individual article-level items was more than we needed to do. We are beginning with the premise that segmenting the images into columns and then processing overlapping snippets will serve the needs of the current project. The immediate goal is to identify pages that contain poetic content, and ultimately we should be able to relate true image snippets to specific zones within the image, without the work of full page segmentation. This approach allows us to move on with the work of processing the pages for poetic content, while others address the page segmentation problems.

As with the feature extraction process, we begin image segmentation with some pre-processing steps. First, for a given image we find an average intensity of the input image pixels by adding up the numerical pixel values and then dividing by the total number of pixels. If the average shows that the image is too bright (i.e., it is above a predetermined threshold), then we enhance the contrast of the image. Once the page image either passes the pixel intensity test or undergoes contrast enhancement, it is binarized using a bi-Gaussian technique. The image then undergoes morphological cleaning to remove noise, where outlying object pixels in areas of background intensity are removed. We then look for columns that have mostly background pixels, which indicate that there may be a column break. The average width of object pixels between columns of predominantly background pixels is used to make the column breaks in the page. Finally, the algorithm separates the original image into many new images, by using the column breaks to determine image width and a pre-determined number of rows for the image height.

Algorithm: Page Segmentation

Input: an original image, Ioriginal, of a newspaper page

Output: a set of image snippets, 〈ioriginal〉

1. Compute average intensity of Ioriginal, AveIntensity(Ioriginal)

2. If AveIntensity(Ioriginal) is too bright then

a. Perform contrast enhancement on Ioriginal to obtain Ienhanced

3. Perform Bi-Gaussian binarization on Ienhanced (or on Ioriginal, if no enhancement was needed) to obtain Ibinary

4. Perform morphological cleaning on Ibinary to obtain Ibinary_cleaned to clean up image noise

5. ColumnBreaks ← FindColumnBreaks(Ibinary_cleaned)

6. 〈ioriginal〉 ← GenerateSnippets(ColumnBreaks, Ioriginal)

End Algorithm
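The steps above can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions, not the project's implementation: a fixed global threshold stands in for the Bi-Gaussian binarization, a linear min-max stretch stands in for the contrast enhancement, morphological cleaning is omitted, column breaks are placed at the center of each run of mostly-background pixel columns, and all function names, thresholds, and snippet sizes are hypothetical.

```python
from statistics import mean


def average_intensity(image):
    """Step 1: mean of all pixel values (0 = black, 255 = white)."""
    return mean(p for row in image for p in row)


def enhance_contrast(image):
    """Step 2a stand-in: linear min-max stretch to the full 0-255 range."""
    lo = min(min(row) for row in image)
    hi = max(max(row) for row in image)
    if hi == lo:
        return [row[:] for row in image]
    return [[round((p - lo) * 255 / (hi - lo)) for p in row] for row in image]


def binarize(image, threshold=128):
    """Step 3 stand-in: fixed threshold, NOT Bi-Gaussian. 1 = object, 0 = background."""
    return [[1 if p < threshold else 0 for p in row] for row in image]


def find_column_breaks(binary, max_object_fraction=0.05):
    """Step 5: x-position at the center of each run of mostly-background pixel columns."""
    height, width = len(binary), len(binary[0])
    is_gap = [sum(row[x] for row in binary) / height <= max_object_fraction
              for x in range(width)]
    breaks, start = [], None
    for x, gap in enumerate(is_gap + [False]):  # sentinel closes a trailing run
        if gap and start is None:
            start = x
        elif not gap and start is not None:
            breaks.append((start + x - 1) // 2)
            start = None
    return breaks


def generate_snippets(breaks, image, rows_per_snippet, overlap):
    """Step 6: cut each column of the original image into vertically overlapping snippets."""
    step = rows_per_snippet - overlap
    if step <= 0:
        raise ValueError("overlap must be smaller than rows_per_snippet")
    last_top = max(len(image) - rows_per_snippet, 0)
    snippets = []
    for left, right in zip(breaks, breaks[1:]):
        for top in range(0, last_top + 1, step):
            snippets.append([row[left:right + 1]
                             for row in image[top:top + rows_per_snippet]])
    return snippets


def segment_page(image, too_bright=200, rows_per_snippet=4, overlap=2):
    """Run steps 1-3 and 5-6; step 4 (morphological cleaning) is left out of this sketch."""
    work = enhance_contrast(image) if average_intensity(image) > too_bright else image
    breaks = find_column_breaks(binarize(work))
    return breaks, generate_snippets(breaks, image, rows_per_snippet, overlap)


# Toy page: two 4-pixel-wide text columns separated by white gutters and margins.
page = [[255] + [0] * 4 + [255] + [0] * 4 + [255] for _ in range(8)]
breaks, snippets = segment_page(page)
print(breaks, len(snippets))
```

In this sketch the page margins count as breaks, so a page whose text runs to the very edge of the image would lose its outer columns; a fuller version would need to treat the image borders as implicit breaks.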

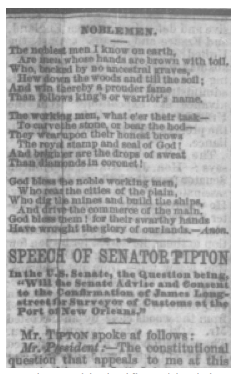

Figure 11 shows the results of finding the columns; the vertical red lines signal the detected column breaks. These columns will then be used to segment the image into image snippets.

Figure 11: Red lines for the column breaks

Thus far, the method has proven quite reliable, although there are identifiable sources of trouble: skewed text; faded text; bleed-through text; damage to original issues including rips, tears, and material degradation; skewed images; and microfilm exposure issues.27

Ultimately, this page segmentation process will allow us to tie true classifications back to page and issue-level metadata for each snippet, so that we might build toward a bibliography of poetry in the newspapers represented in Chronicling America. A next stage would be connecting the true snippets with underlying coordinate information captured in Chronicling America's XML data structure for each page, thus relating image snippets to particular zones of text.

In the coming months, we will begin the process of automatically creating snippets and classifying snippets from newspapers from the period 1836–1840. We will begin with this period both because it comprises the first five years of papers available digitally from Chronicling America and because the time period coincides with the emergence of the penny press in newspaper history. Poems were a popular feature of newspapers during this period, so we anticipate this subset from Chronicling America to feature a significant amount of poetic content and be a useful case study for further analyzing and refining our classifier.

At the same time as we proceed with testing a broader deployment of the ANN-based classifier and addressing our problem of over-fitting the classifier, we will proceed on a secondary front as well. We are beginning work to test a decision tree for the classifier—potentially in place of or in a process complementary to the ANN approach. A decision tree would allow us to interpret the classification decision making process clearly, in a way that the black box of the ANN does not.

7 Conclusion

Information professionals, digital humanists, and other researchers face a key challenge: locating materials of relevance, and certainly materials of relevance at scale, within digital collections can already be quite difficult, and it will become increasingly so as more content is digitized. Traditional methods of access and discovery will continue to meet some use cases, but in order to benefit more fully from digitization and the advent of digital resources, we must consider new and alternative approaches that open more paths for research. Therefore, while the Aida project team has begun with a single case study to explore image analysis as a strategy for discovery, a larger goal of the project is to advance image processing and image analysis as a methodology within the digital humanities and digital libraries communities. Although text-based approaches are powerful in their own right, the present domination of text-only approaches already restricts the types of questions research can pursue in digitized collections. Over time, such restriction has the potential to narrow even the types of questions we imagine. Therefore, information professionals, digital humanists, and researchers should be mindful of, and proactively seek, diversity in both the materials and the methodologies used for building, using, and analyzing digital collections.

Notes

The Image Analysis for Archival Discovery project is supported by a National Endowment for the Humanities Digital Humanities Start-up Grant. Any views, findings, conclusions, or recommendations expressed in this article do not necessarily represent those of the National Endowment for the Humanities.

1 This article draws on language from Elizabeth Lorang and Leen-Kiat Soh, "Image Analysis for Archival Discovery (Aida)" (grant application to the National Endowment for the Humanities, Digital Humanities Start-up Grant Competition, September 12, 2013), as well as on two conference presentations: Elizabeth Lorang, Leen-Kiat Soh, Grace Thomas, and Joseph Lunde, "Detecting Poetic Content in Historic Newspapers with Image Analysis" (presentation, Digital Humanities 2014, Alliance of Digital Humanities Organizations Conference, Lausanne, Switzerland, July 7–12, 2014); and Elizabeth Lorang and Leen-Kiat Soh, "Leveraging Visual Information for Discovery and Analysis of Digital Collections" (presentation, Digital Library Federation Forum, Atlanta, GA, October 27–29, 2014).

2 Nikolaos Arvanitopoulos Darginis and Sabine Süsstrunk, "Binarization-free Text Line Extraction for Historical Manuscripts" (paper); David Robey, Charles Crowther, Julianne Nyhan, Segolene Tarte, and Jacob Dahl, "New and Recent Developments in Image Analysis: Theory and Practice" (panel); John Resig and Doug Reside, "Using Computer Vision to Improve Image Metadata" (presentation); Eleanor Chamberlain Anthony, "From the Archimedes' Palimpsest to the Vercelli Book: Dual Correlation Pattern Recognition and Probabilistic Network Approaches to Paleography in Damaged Manuscripts" (presentation); Matthew J. Christy, Loretta Auvil, Ricardo Gutierrez-Osuna, Boris Capitanu, Anshul Gupta, and Elizabeth Grumbach, "Diagnosing Page Image Problems with Post-OCR Triage for eMOP" (presentation); Carl Stahmer, "Arch-V: A Platform for Image-Based Search and Retrieval of Digital Archives" (presentation). All presented at Digital Humanities 2014, Alliance of Digital Humanities Organizations Conference, Lausanne, Switzerland, July 7–12, 2014.

Submissions to the annual Digital Humanities conference tagged by their authors as dealing with image processing similarly suggest an uptick in digital humanities research dealing with images. In 2013, 2.87% of submitting authors identified their work as dealing with image processing. In 2014, this percentage increased to 4.58% (submissions tagged as such for the 2015 conference went down slightly to 4.19%). See Scott Weingart, "Analyzing Submissions to Digital Humanities 2013," The Scottbot Irregular (blog), November 8, 2012; "Submissions to Digital Humanities 2014," The Scottbot Irregular (blog), November 5, 2013; and "Submissions to Digital Humanities 2015 (pt. 1)," The Scottbot Irregular (blog), November 6, 2014.

Presentations focusing primarily on digital images may have been tagged with other keywords by their authors and therefore did not get gathered under the image processing umbrella. Similarly, in terms of the overall whole, image analysis remains a small piece of the conference, well behind text analysis at 20.95%, 21.56%, and 16.62% of submissions for the 2015, 2014, and 2013 conferences respectively. While other factors certainly may be responsible for the increased percentage of papers dealing with image processing, one possible explanation is that digital images and image analysis are emerging as more significant research areas within the digital humanities and digital libraries communities.

3 Layout analysis and image segmentation are not new domains; they have been used in the past to identify such features as indexes and footnotes in books. Compared with other historic materials, these features are far more regular, and formatted differently enough from the rest of the text, that they do not pose as significant a problem for analysis and identification.

4 Chronicling America. As of March 2015, Chronicling America included more than 9.24 million images of newspaper pages, and this number grows monthly. When the Aida team prepared its grant application for the National Endowment for the Humanities in 2013, the number of images was near 7 million. Ultimately, we plan to process the totality of Chronicling America, but we will begin with what is now a subset of the total, at least 7 million page images.

5 Ted Underwood, "Understanding Genre in a Collection of a Million Volumes, Interim Report" (report, December 29, 2014). Figshare. http://doi.org/10.6084/m9.figshare.1281251

6 Jody L. DeRidder and Kathryn G. Matheny, "What Do Researchers Need? Feedback on use of Online Primary Source Materials," D-Lib Magazine 20, 7/8 (2014). http://doi.org/10.1045/july2014-deridder

7 Some examples include the Software Studies Initiative's ImagePlot software; Flickr's Park or Bird project; Facebook's, and others', facial recognition systems for digital photographs; and Google's Image Search. Google also is working on image recognition software to describe entire scenes in digital photographs. See John Markoff, "Researchers Announce Advance in Image-Recognition Software," New York Times, November 17, 2014.

8 Kalev H. Leetaru and Robert Miller, "Unlocking the Imagery of 500 Years of Books," The Signal (blog), December 22, 2014.

9 Andrew Hobbs and Claire Januszewski, "How Local Newspapers Came to Dominate Victorian Poetry Publishing," Victorian Poetry 52, 1 (2014), 65–87. http://doi.org/10.1353/vp.2014.0008

10 As of March 2015, Chronicling America included digitized newspapers in seven languages: English, French, German, Hawaiian, Italian, Japanese, and Spanish.

11 Viral Texts project; David A. Smith, et al., "Detecting and Modeling Local Text Reuse," Proceedings of the 2014 IEEE/ACM Joint Conference on Digital Libraries (2014), 183–192, http://doi.org/10.1109/JCDL.2014.6970166; Shaobin Xu, David A. Smith, Abigail Mullen, and Ryan Cordell, "Detecting and Evaluating Local Text Reuse in Social Networks," Proceedings of the Joint Workshop on Social Dynamics and Personal Attributes in Social Media (2014), 50–57; and David A. Smith, Ryan Cordell, and Elizabeth Maddock Dillon, "Infectious Texts: Modeling Text Reuse in Nineteenth-Century Newspapers," 2013 IEEE International Conference on Big Data (2013), 86–94, http://doi.org/10.1109/BigData.2013.6691675.

12 Robert K. Nelson, "Mining the Dispatch."

13 Carolyn Strange, Daniel McNamara, Josh Wodak, and Ian Wood, "Mining for the Meanings of a Murder: The Impact of OCR Quality on the Use of Digitized Historical Newspapers," Digital Humanities Quarterly 8, 1 (2014).

14 Underwood's research to categorize pages of non-periodical publications in HathiTrust according to genre, based on linguistic features, underscores the need for novel approaches to connecting researchers with materials of interest and relevance within digital collections. See Underwood, "Understanding Genre."

15 See, as some examples, T. Hassan and R. Baumgartner, "Table Recognition and Understanding from PDF Files," Ninth International Conference on Document Analysis and Recognition 2 (2007), 1143–1147, http://doi.org/10.1109/ICDAR.2007.4377094; Ying Liu, Prasenjit Mitra, and C. Lee Giles, "Identifying Table Boundaries in Digital Documents via Sparse Line Detection," Proceedings of the 17th ACM Conference on Information and Knowledge Management (2008), 1311–1320, http://doi.org/10.1145/1458082.1458255; Jing Fang, et al., "A Table Detection Method for Multipage PDF Documents via Visual Separators and Tabular Structures," 2011 International Conference on Document Analysis and Recognition (2011), 779–783, http://doi.org/10.1109/ICDAR.2011.304; Emilia Apostolova, et al., "Image Retrieval from Scientific Publication: Text and Image Content Processing to Separate Multipanel Figures," Journal of the American Society for Information Science and Technology 64, 5 (2013), 893–908; Stefan Klampfl and Roman Kern, "An Unsupervised Machine Learning Approach to Body Text and Table of Contents Extraction from Digital Scientific Articles," in Research and Advanced Technology for Digital Libraries, ed. Trond Aalberg, et al. (Berlin: Springer, 2013), 144–155, http://doi.org/10.1007/978-3-642-40501-3_15.

16 See, for example, Reinhold Huber-Mörk and Alexander Schindler, "An Image Based Approach for Content Analysis in Document Collections," in Advances in Visual Computing, ed. George Bebis, et al. (Berlin: Springer, 2013), 278–287, http://doi.org/10.1007/978-3-642-41939-3_27. Image use in other domains, such as in natural sciences, has been more extensive. That extensive literature is not covered here, other than to the extent to which relevant approaches and scholarship provide a foundation for the work pursued here.

17 Iuliu Konya and Stefan Eickeler, "Logical Structure Recognition for Heterogeneous Periodical Collections," in Proceedings of the First International Conference on Digital Access to Textual Cultural Heritage (New York: ACM, 2014), 185.

18 Hongxing Gao, et al., "An Interactive Appearance-based Document Retrieval System for Historic Newspapers," Proceedings of the Eighth International Conference on Computer Vision Theory and Applications, VISAPP13 2 (2013), 84–87.

19 Neal Audenaert and Natalie M. Houston, "VisualPage: Towards Large Scale Analysis of Nineteenth-Century Print Culture," 2013 IEEE International Conference on Big Data (2013), 9–16, http://doi.org/10.1109/BigData.2013.6691665; Natalie Houston, white paper report on The Visual Page, March 31, 2014. Audenaert and Richard Furuta earlier demonstrated the need to "[support] research tasks where access to high-quality transcriptions is either infeasible or unhelpful." See Audenaert and Furuta, "What Humanists Want: How Scholars Use Source Materials," Proceedings of the 10th Annual Joint Conference on Digital Libraries (2010), 283–292, http://doi.org/10.1145/1816123.1816166.

20 HathiTrust Digital Library, "HathiTrust Research Center Awards Four Prototyping Project Grants," May 7, 2014.

21 D. Haverkamp, L.-K. Soh, and C. Tsatsoulis, "A Comprehensive, Automated Approach to Determining Sea Ice Thickness from SAR Data," IEEE Transactions on Geoscience and Remote Sensing 33, 1 (January 1995), 46–57.

22 T. Y. Young and G. Coraluppi, "Stochastic Estimation of a Mixture of Normal Density Functions Using an Information Criterion," IEEE Transactions on Information Theory IT-16, 3 (May 1970), 258–263.

23 R. L. Kashyap and Colin C. Blaydon, "Estimation of Probability Density and Distribution Functions," IEEE Transactions on Information Theory IT-14, 4 (July 1968), 549–556.

24 For details, refer to Haverkamp, Soh, and Tsatsoulis.

25 Elizabeth M. Lorang, "American Newspaper Poetry from the Rise of the Penny Press to the New Journalism" (PhD diss., University of Nebraska–Lincoln, 2010).

26 See, for example, David Hebert, et al., "Automatic Article Extraction in Old Newspapers Digitized Collections," in Proceedings of the First International Conference on Digital Access to Textual Cultural Heritage (New York: ACM, 2014), http://doi.org/10.1145/2595188.2595195.

27 These issues are similar to those identified by Hebert, et al.

About the Authors

Elizabeth Lorang is Research Assistant Professor and Digital Humanities Projects Librarian in the Center for Digital Research in the Humanities at the University of Nebraska–Lincoln. She is a co-principal investigator of the NEH-funded project Image Analysis for Archival Discovery (aida.unl.edu), program manager and senior associate editor of the Walt Whitman Archive, and co-director of Civil War Washington. With R. J. Weir, she edited the electronic scholarly edition, "'Will not these days be by thy poets sung': Poems of the Anglo-African and National Anti-Slavery Standard, 1863–1864". Her research interests include digital humanities and digital scholarship, academic libraries, American poetry, and nineteenth-century American newspapers.

Leen-Kiat Soh received his B.S. with highest distinction, and M.S., and Ph.D. with honors in electrical engineering from the University of Kansas, Lawrence. He is now an Associate Professor at the Department of Computer Science and Engineering at the University of Nebraska, Lincoln. His primary research interests are in multiagent systems and intelligent agents, especially in coalition formation and multiagent learning. He has applied his research to computer-aided education, intelligent decision support, distributed GIS, survey informatics, and image analysis. He has also conducted research in computer science education. Dr. Soh is a member of IEEE, ACM, and AAAI. He is co-principal investigator of Image Analysis for Archival Discovery.

Maanas Varma Datla is an undergraduate Computer Science and Engineering major at the University of Nebraska–Lincoln (UNL) with interests in artificial intelligence and machine learning. His work on Image Analysis for Archival Discovery has been supported in part by the Undergraduate Creative Activities and Research Experience program at UNL.

Spencer Kulwicki is an undergraduate Computer Science and Engineering major at the University of Nebraska–Lincoln.
