D-Lib Magazine

March/April 2015

Volume 21, Number 3/4

Trustworthiness: Self-assessment of an Institutional Repository against ISO 16363-2012

Bernadette Houghton

Deakin University, Geelong, Australia

bernadette.houghton@deakin.edu.au

DOI: 10.1045/march2015-houghton

Abstract

Today, almost every document we create, and the output from almost every research-related project, is a digital object. Not everything has to be kept forever, but materials with scholarly or historical value should be retained for future generations. Preserving digital objects is more challenging than preserving items on paper. Hardware becomes obsolete, new software replaces old, storage media degrade. In recent years, there has been significant progress made to develop tools and standards to preserve digital media, particularly in the context of institutional repositories. The most widely accepted standard thus far is the Trustworthy Repositories Audit and Certification: Criteria and Checklist (TRAC), which evolved into ISO 16363-2012. Deakin University Library undertook a self-assessment against the ISO 16363 criteria. This experience culminated in the current report, which provides an appraisal of ISO 16363, the assessment process, and advice for others considering embarking on a similar venture.

Introduction

Digital preservation is a relatively young field, but significant progress has already been made towards developing tools and standards to better support preservation efforts. In particular, there has been growing interest in the audit and certification of digital repositories. Given the growing reliance on institutional repositories in the past decade (OpenDOAR, 2014), the need for researchers to be able to trust that their research output is safe is becoming increasingly important. This need was formally recognised by Deakin University Library in 2013, with the establishment of a project to determine the compliance of its research repository, Deakin Research Online (DRO), with digital preservation best practices.

DRO was established in 2007 against the background of the Australian Government's Research Quality Framework (Department of Education, Science and Training, 2007). Its primary objective was to facilitate the deposit of research publications for reporting and archival purposes. The Fez/Fedora software underlying DRO was chosen, in large part, because of its preservation-related functionality, such as versioning, and JHOVE and PREMIS support. Over the years, new functionality has been added to DRO, and workflows changed as deposit and reporting requirements changed. Several ad-hoc mini projects have been undertaken to address specific preservation-related aspects of DRO, but up to 2013 there had been no assessment of the new functionality or workflows against digital preservation best practices.

A review of the digital preservation literature indicated that external accreditation of the trustworthiness of digital repositories by bodies such as the Center for Research Libraries (CRL) had started to gain some traction among larger cross-institutional repositories (CRL, 2014). Due to the cost, external accreditation was not seen as a viable option for Deakin (a medium-sized university library) at that stage; however, a self-assessment against the standards used for accreditation was considered feasible.

The literature review indicated that the Trustworthy Repositories Audit and Certification (TRAC) checklist (CRL, OCLC, 2007) was the most widely accepted set of criteria for assessing the trustworthiness of digital repositories. TRAC, originally developed by the Research Libraries Group (RLG) and the National Archives and Records Administration, evolved into the Audit and Certification of Trustworthy Digital Repositories: Recommended practice CCSDS 652.0-M-1 (Consultative Committee for Space Data Systems (CCSDS), 2011), which was formalised as ISO 16363 in 2012 (International Organization for Standardization, 2012). ISO 16363 was chosen as the basis of Deakin's self-assessment of DRO.

Preliminary research for the project began in May 2013, with one staff member (the author) allocated to the project on a part-time basis. Initially, the expected completion date was August 2013. However, due to other work priorities, the actual assessment did not begin until July 2013. The final report was drafted in December 2013.

Laying the Groundwork

Once the go-ahead was given for the project, research was undertaken to learn from others' experience. Of particular interest were the audits undertaken by CRL of HathiTrust, Portico and Scholars Portal (CRL, 2014). It was noted that each of the repositories reviewed had committed to some degree of self-assessment in addition to the external accreditation audit by CRL. The CRL website includes checklists and templates which can be used as the basis for a self-audit (CRL, 2014). However, these were considered too out-of-date to use, being based on the 2007 TRAC criteria rather than the more recent ISO 16363.

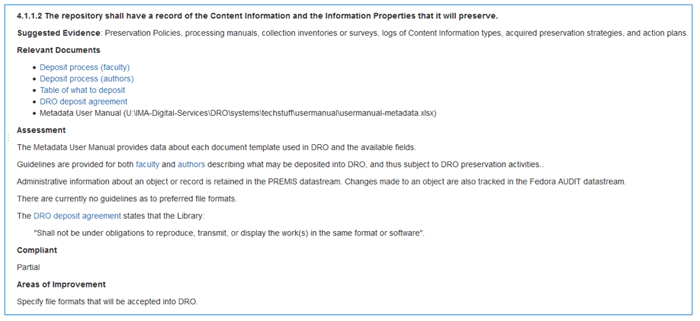

A wiki was chosen as the mechanism for documenting the self-assessment. The CRL website includes an Excel template as the documentation mechanism; however, the choice of a wiki instead turned out to be a rather wise decision. Some of the ISO 16363 criteria required lengthy responses which don't fit too well into spreadsheets. The wiki was set up to include the following fields for each criteria: "Suggested evidence", "Relevant documents", "Assessment", "Compliant" and "Areas of Improvement". The setup stage was a good opportunity to become familiar with the criteria and the documentation required for the next step of the assessment.

Figure 1: Example wiki documentation of the self-assessment.

View larger version of Figure 1.

Once the wiki framework was complete, a preliminary document hunt was undertaken to identify documentation that would provide evidence of the extent to which DRO was meeting each criterion. Relevant policies and procedures, at both Library and University level, were sought out and linked to in the wiki. This required a good knowledge of the University's and Library's organisational responsibilities, as well as familiarity with the University's governance web pages. ISO 16363 provides 'suggested evidence' examples for each of its criteria. However, it soon became obvious that many of the suggested examples were not relevant for DRO. From then on, documents were gathered based on the author's local knowledge. The preliminary collection of documentation did not remove the need to search for additional documents during the course of the assessment, but it did save much time.

Once the preliminary documents had been gathered, each criterion was reviewed against the relevant documentation. Additional documentation was chased up as necessary, and workflows and procedures clarified with relevant staff. An assessment was then made of DRO's compliance with the criterion, and areas of improvement identified. All findings were progressively documented in the wiki, with each criterion allocated a rating of "full compliance", "part compliance" or "not compliant".
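The wiki layout described above amounts to one record per criterion. A minimal Python sketch of such a record follows. The class, field and function names are hypothetical (the article does not describe the wiki's internals), and the "not applicable" value is an assumption added alongside the three ratings used in the assessment.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Rating(Enum):
    FULL = "full compliance"
    PART = "part compliance"
    NOT_COMPLIANT = "not compliant"
    NOT_APPLICABLE = "not applicable"  # assumption: not one of the three wiki ratings


@dataclass
class CriterionRecord:
    """One wiki entry per ISO 16363 criterion; fields mirror the wiki headings."""
    criterion_id: str                                   # e.g. "3.3.5"
    suggested_evidence: List[str] = field(default_factory=list)
    relevant_documents: List[str] = field(default_factory=list)
    assessment: str = ""
    compliant: Optional[Rating] = None                  # None until assessed
    areas_of_improvement: List[str] = field(default_factory=list)


def outstanding(records):
    """Return the ids of criteria that have not yet been given a rating."""
    return [r.criterion_id for r in records if r.compliant is None]


# Hypothetical entries, for illustration only.
records = [
    CriterionRecord(
        "3.3.5",
        relevant_documents=["(hypothetical) fixity checking procedure"],
        assessment="Checks are run but not reported on.",
        compliant=Rating.PART,
        areas_of_improvement=["Define and track integrity measurements"],
    ),
    CriterionRecord("4.1.1"),
]
print(outstanding(records))
```

Keeping the rating empty until a criterion has actually been reviewed makes it easy to see how much of the assessment is still to be done.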

ISO 16363 Assessment

ISO 16363 contains 105 criteria covering 3 areas. The criteria are generally very comprehensive, although some have inadequate or missing explanations, leaving them open to interpretation. There is some overlap between individual criteria, and not all criteria will be applicable to all repositories. The areas are:

- Organizational infrastructure, including governance, organisational structure and viability, staffing, accountability, policies, financial sustainability and legal issues;

- Digital object management, covering acquisition and ingest of content, preservation planning and procedures, information management and access;

- Infrastructure and security risk management, covering technical infrastructure and security issues.

ISO 16363's example documentation for each criterion should be viewed as a general guideline only. Each repository's mileage will vary here; documentation that is relevant for one repository will not be relevant for another. The collection of documentation was probably the most time-consuming part of Deakin's self-assessment, as documents were scattered across multiple areas of the University.

Ambiguous terminology used by ISO 16363 caused some confusion. For example, ISO 16363 uses "linking/resolution services" to refer to persistent identifiers or links to materials from the repository. However, in Deakin's experience, this terminology is more commonly used to refer to link resolution services such as WebBridge and SFX.

ISO 16363 is based on the Open Archival Information System (OAIS) model (CCSDS, 2012). It needs to be kept in mind that OAIS is a conceptual model that won't necessarily appear to align with actual or desired workflows. For example, OAIS treats submission, dissemination and archival packages as separate entities. This doesn't mean that OAIS-compliance requires separate submission, dissemination and archival packages. The theoretical nature of OAIS is reflected in ISO 16363.

Undertaking a self-assessment against ISO 16363 is not a trivial task, and is likely to be beyond the ability of smaller repositories to manage. Any organisation that undertakes a self-assessment against ISO 16363 should tailor it to best fit their own circumstances and budget. If ISO 16363 still appears too mammoth an undertaking, alternative tools exist, such as NDSA's Levels of Digital Preservation (Owens, 2012).

It should be stressed that a self-assessment is not an audit. In an independent audit, the auditor would be at arm's length from the repository, evidence of compliance with policies and procedures would be required, and testing undertaken to confirm that the repository's preservation-related functionality was indeed operating as expected. Deakin's self-assessment was undertaken by the author, who works closely with DRO, and was basically restricted to a review of relevant documentation and practices. For a first self-assessment, this was considered to be an appropriate strategy. However, future self-assessments should undertake more rigorous testing of DRO's underlying functionality.

Findings

The assessment indicated that DRO meets most of the criteria for being considered a Trusted Digital Repository, but, as expected, there is room for improvement. More specifically, DRO fully meets 67 of the 105 criteria, partially meets 32, and does not meet 5 criteria, with one criterion not applicable. Table 1 summarises these results across the three ISO 16363 areas.

Table 1: ISO 16363 compliance summary

| ISO 16363 Section | Full Compliance | Part Compliance | Not Compliant |
| --- | --- | --- | --- |
| 3. Organizational infrastructure | 13 (54%) | 8 (33%) | 3 (13%) |
| 4. Digital object management | 36 (62%) | 20 (34%) | 2 (3%) |
| 5. Infrastructure and security risk management | 18 (82%) | 4 (18%) | 0 |
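As a cross-check, the section percentages and overall totals can be recomputed from the counts in Table 1. The short Python sketch below does this. The variable and function names are illustrative, and rounding half up to a whole percent is an assumption about how the published percentages were derived.

```python
# Counts copied from Table 1: (full, part, not compliant) per ISO 16363 section.
TABLE_1 = {
    "3. Organizational infrastructure": (13, 8, 3),
    "4. Digital object management": (36, 20, 2),
    "5. Infrastructure and security risk management": (18, 4, 0),
}
NOT_APPLICABLE = 1  # one criterion was judged not applicable


def percentages(counts):
    """Share of each rating within a section, rounded half up to a whole percent."""
    total = sum(counts)
    # Integer arithmetic avoids floating-point surprises at exact .5 boundaries.
    return tuple((200 * c + total) // (2 * total) for c in counts)


# Column totals across the three sections: (full, part, not compliant).
totals = tuple(sum(column) for column in zip(*TABLE_1.values()))
assessed = sum(totals)

for section, counts in TABLE_1.items():
    print(section, counts, percentages(counts))
print("totals (full, part, not):", totals)               # (67, 32, 5)
print("criteria covered:", assessed + NOT_APPLICABLE)    # 105
```

The totals account for all 105 criteria once the single not-applicable criterion is included.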

It should be pointed out here that not all criteria should be considered to have equal weight. While ISO 16363 does not allocate a rating to each criterion, some criteria are obviously more important in terms of a repository's trustworthiness than others. A statement that a repository "meets 60% of the ISO 16363 criteria" can be very misleading if one of the criteria the repository does not meet is fundamental to a repository's trustworthiness; for instance, absence of integrity measurements (ISO 16363 criterion 3.3.5).
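One way to keep a headline percentage from hiding such a gap is to report it alongside any unmet criteria the assessor regards as fundamental. The sketch below illustrates the idea in Python. The function name, the rating labels and the choice of which criteria count as critical are all assumptions made for illustration; ISO 16363 itself does not define weights or a critical set.

```python
def headline(ratings, critical):
    """Return (percent fully met, critical criteria that are not fully met).

    ratings  maps a criterion id to "full", "part" or "not"
    critical is the set of ids the assessor regards as fundamental (a local
             judgement, not something defined by ISO 16363)
    """
    met = sum(1 for rating in ratings.values() if rating == "full")
    percent = round(100 * met / len(ratings))
    gaps = sorted(c for c in critical if ratings.get(c) != "full")
    return percent, gaps


# Hypothetical ratings for five criteria; the ids are used only as labels.
ratings = {
    "3.1.1": "full",
    "3.3.5": "not",
    "4.1.1": "full",
    "4.2.1": "part",
    "5.1.1": "full",
}
percent, gaps = headline(ratings, critical={"3.3.5"})
print(f"{percent}% fully met; critical gaps: {gaps}")
```

In this made-up example the repository fully meets 60% of the criteria, yet the one it fails outright is the integrity-measurement criterion, which is exactly the situation the paragraph above warns about.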

The self-assessment indicated that DRO's approach to preservation tends to be ad-hoc, which was already known. To a large extent, this is due to the still-evolving nature of the digital preservation field, and most repositories will be in a similar situation at this point in time. This approach is considered to have been an appropriate one thus far; however, it is expected that a more pro-active approach will be taken in future, in line with the availability of new tools and a more mature digital preservation knowledge base. The majority of the areas of improvement identified by the self-assessment have now been incorporated into Deakin's preservation strategy plan.

The next step for Deakin is to implement the practical strategies outlined in its preservation strategy plan to ensure it is best placed to take advantage of evolving practices in digital preservation.

Summary and Conclusions

Overall, the self-assessment has been a time-consuming and resource-heavy exercise, but a beneficial one, with several areas of weakness identified in DRO's setup and workflows. A strategic plan has since been developed to address these weaknesses, and thus increase the robustness of DRO's trustworthiness as a repository. It is anticipated that regular re-assessments will be undertaken at 3-yearly intervals; these are not expected to be as resource-intensive as the initial self-assessment as the relevant documentation has already been identified and located.

It is highly recommended that other libraries undertake a similar self-assessment of their repository at some stage, with the tools used and the depth of the assessment dependent on the size of the library and the level of available resources. Researchers expect their publications to be safe in institutional repositories, and repository managers need to ensure their repository meets this expectation. Self-assessment is one mechanism by which they can ascertain whether their repository is indeed trustworthy.

Based on Deakin's experience, the following suggestions are offered to repository managers who plan to undertake an ISO 16363 assessment:

- Do a self-assessment before considering paying for external certification. Certification — and re-certification — is expensive.

- Get senior management on board. Their support is essential. Digital preservation is a long-term issue.

- The individual doing the self-assessment should be reasonably familiar with the organisation's and repository's policies and procedures.

- If you don't have the time or resources to undertake an ISO 16363 assessment, consider doing an assessment against NDSA Levels of Digital Preservation (Owens, 2012).

- Set up a wiki to document the self-assessment. Do this at the start, and document findings as you go along.

- Tailor the self-assessment to risk and available time and resources.

- Determine in advance how deep the assessment will go. For example, will the assessor just collect and review documentation, or will they also check to ensure that documented procedures have been followed and everything 'under the hood' is working properly?

- Use local knowledge when gathering documentation. ISO 16363's 'suggested evidence' examples are possibilities only.

- Become familiar with the criteria before you start the assessment. Some documentation will be relevant to multiple criteria, so it saves time if you can identify those criteria early on.

- Remember, not all ISO 16363 criteria will be applicable to your particular situation.

- Keep up the momentum. Finishing the self-assessment does not mean the hard work is over. There will be improvements that need making. Aim to build up your repository's digital resilience over time.

- Schedule regular self-assessments.

- If you're thinking about doing an ISO 16363 self-assessment at some time in the future, start the process now. Set up a wiki page to record relevant documentation you come across in the meantime. Keep a watching brief on digital preservation issues, and update the wiki as needed to save time later on.

- Don't assume that because your repository software is OAIS-compliant, your repository itself is also. Workflows and repository setup can make or break OAIS-compliance.

- Not all ISO 16363 criteria have the same importance or risk level. Assess each criterion accordingly.

- ISO 16363 is based on a conceptual model (OAIS). Don't expect the criteria to necessarily align with your repository's particular setup and workflows.

References

[1] Center for Research Libraries, OCLC, 2007, Trustworthy repositories audit & certification (TRAC) criteria and checklist, Version 1, Center for Research Libraries.

[2] Center for Research Libraries, 2014, TRAC and TDR checklists, Center for Research Libraries.

[3] Consultative Committee for Space Data Systems, 2011, Recommendation for space data system practices: audit and certification of trustworthy digital repositories, Recommended practice CCSDS 652.0-M-1, Magenta book, Consultative Committee for Space Data Systems.

[4] Consultative Committee for Space Data Systems, 2012, Reference model for an Open Archival Information System (OAIS): recommended practice CCSDS 650.0-M-2, CCSDS, Washington, DC.

[5] Department of Education, Science and Training, 2007, Research Quality Framework: assessing the quality and impact of research in Australia. RQF technical specifications, Commonwealth of Australia.

[6] International Organization for Standardization, 2012, Space data and information transfer systems: audit and certification of trustworthy digital repositories, ISO 16363:2012.

[7] OpenDOAR, 2014, Growth of the OpenDOAR database — worldwide, OpenDOAR.

[8] Owens, Trevor, 2012, NDSA levels of digital preservation: release candidate one, Library of Congress.

About the Author

Bernadette Houghton is currently the Digitisation and Preservation Librarian at Deakin University in Geelong, Australia. She has worked with Deakin Research Online since its inception in 2007, and has a strong background in systems librarianship and cataloguing, as well as 4 years as an internal auditor.
